Module-4: Docker Assignment

1. Explain the difference between Virtualization, Containerization and Microservices.

Ans:

Virtualization:

Definition: Virtualization involves creating a virtual (rather than actual) version of something, such as a server, operating system, storage device, or network resources.

Key Technology: Hypervisors (e.g., VMware, Hyper-V) allow multiple operating systems to run on a single physical machine.

Purpose: Virtualization enables the efficient utilization of physical hardware by creating virtual instances that can run multiple applications or operating systems independently.

Containerization:

Definition: Containerization is a lightweight form of virtualization that encapsulates an application and its dependencies into a container, which can run consistently across different computing environments.

Key Technology: Docker is a popular containerization platform.

Purpose: Containers provide a standardized and portable environment for applications, ensuring consistency from development to testing and production. They are isolated from each other and share the same operating system kernel.

Microservices:

Definition: Microservices is an architectural style where an application is built as a collection of small, independent, and loosely coupled services that communicate with each other through well-defined APIs.

Key Characteristics: Each microservice is a self-contained unit, independently deployable, and can be developed, deployed, and scaled independently of other services.

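Purpose: Microservices architecture aims to improve the scalability, flexibility, and maintainability of large, complex applications. It allows teams to work on different services concurrently, enabling faster development and deployment cycles.

In short, virtualization and containerization are ways of packaging and isolating workloads (at the hardware level and the operating-system level, respectively), while microservices is a way of designing the application itself. Microservices are commonly, but not necessarily, deployed in containers.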

2. Define Docker Engine, Docker Image, Docker File, Docker Compose. Describe the lifecycle of Docker Container.

Docker Engine:

Docker Engine is the core of the Docker platform. It is a lightweight and powerful open-source containerization technology that automates the deployment of applications inside containers.

It consists of a server (the dockerd daemon), a REST API, and a command-line interface (CLI) that allows users to interact with the daemon.

Docker Image:

A Docker image is a lightweight, standalone, and executable package that includes everything needed to run a piece of software, including the code, runtime, libraries, and system tools.

Images are often built from a set of instructions called a Dockerfile.

Dockerfile:

A Dockerfile is a plain text file that contains a set of instructions for building a Docker image. It specifies the base image, adds or modifies files, sets environment variables, and runs other commands.

Docker images are created by building a Dockerfile using the docker build command.

Docker Compose:

Docker Compose is a tool for defining and running multi-container Docker applications. It allows you to describe all the services, networks, and volumes required for a complete application stack in a single file called docker-compose.yml.

With Docker Compose, you can start and stop all the services defined in the docker-compose.yml file with a single command.

Now, let's discuss the lifecycle of a Docker container:

Create:

- A Docker container is created from a Docker image using the docker create command, or created and started in one step using the docker run command. The image contains the application and its dependencies.

Run:

- The container runs in isolation from the host and other containers. It has its own filesystem, processes, and network.

Update:

- If changes are needed in the application or its environment, you can update the Docker image by modifying the Dockerfile and rebuilding it using the docker build command.

Stop:

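- The container can be stopped using the docker stop command. This sends SIGTERM to the container's main process and, if the process has not exited after a grace period (10 seconds by default), SIGKILL.

Start:

- A stopped container can be started again using the docker start command. The main process is launched afresh: changes made to the container's filesystem are preserved, but in-memory state from the previous run is not.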

Pause/Unpause:

- Docker containers can be paused and resumed using the docker pause and docker unpause commands, respectively. Pausing suspends all processes in the container without terminating them.

Remove:

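- A container can be removed using the docker rm command. The container must be stopped first (or removed with the -f option). This deletes the container, but not the underlying image.

Destroy:

- If the container is no longer needed and you want to clean up resources, you can use the docker rm command with the -v option to also remove the anonymous volumes associated with it. Named volumes are kept and must be removed separately with docker volume rm.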
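The lifecycle stages above can be walked through in order with a short script. This is a minimal sketch rather than a definitive procedure: the container name lifecycle-demo and the nginx:latest image are illustrative choices, and the function is only defined here so that nothing runs until you call it.

```shell
#!/bin/sh
# Walk a single container through the lifecycle stages described above.
# "lifecycle-demo" and nginx:latest are illustrative, not part of the assignment.
lifecycle_demo() {
    name="lifecycle-demo"
    docker create --name "$name" nginx:latest   # Create: the container exists but is not running
    docker start "$name"                        # Run: start the main process
    docker pause "$name"                        # Pause: suspend all processes
    docker unpause "$name"                      # Unpause: resume them
    docker stop "$name"                         # Stop: SIGTERM, then SIGKILL after the grace period
    docker start "$name"                        # Start: launch the main process again
    docker stop "$name"
    docker rm -v "$name"                        # Remove: delete the container and its anonymous volumes
}

# Run it with:  lifecycle_demo
# Inspect the state between steps with:  docker ps -a --filter "name=lifecycle-demo"
```

Running docker ps -a between steps shows the STATUS column change (Created, Up, Paused, Exited), which is a convenient way to see each stage.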

3. Explain the different Docker Components - Docker Client, Docker Host and Docker Registry.

Docker Client:

The Docker Client is a command-line interface (CLI) tool that allows users to interact with the Docker daemon.

It accepts commands from the user and communicates with the Docker daemon to execute those commands.

Users can use the Docker CLI to build, manage, and run containers.

The Docker Client can run on the same machine as the Docker daemon or connect to a remote Docker daemon.

Docker Host:

The Docker Host is the machine (physical or virtual) that runs the Docker daemon (dockerd), a background process that manages Docker containers on that system.

The daemon is responsible for building, running, and managing containers.

It listens for requests from the Docker Client and takes care of tasks such as container creation, starting, stopping, and monitoring.

It also manages the storage, networking, and other essential aspects of containerized applications.

Docker Registry:

The Docker Registry is a service for storing and distributing Docker images.

A Docker image is a lightweight, standalone, executable package that includes all the necessary components (code, libraries, dependencies, and configurations) needed to run a software application.

Docker images are stored in a Docker Registry, and users can pull these images to their local environment to run containers.

The most commonly used public Docker Registry is Docker Hub, but organizations can also set up private registries for storing proprietary or sensitive images.

4. Explain with an example, how you would push a local image to DockerHub and AWS ECR.

Ans:

Pushing a local image to DockerHub:

Installation of Docker on Ubuntu:

sudo apt update

sudo apt install docker.io

sudo systemctl start docker

sudo systemctl enable docker

docker --version

sudo usermod -aG docker $USER

newgrp docker (or log out and back in, so that the new group membership takes effect)

sudo systemctl status docker

groups

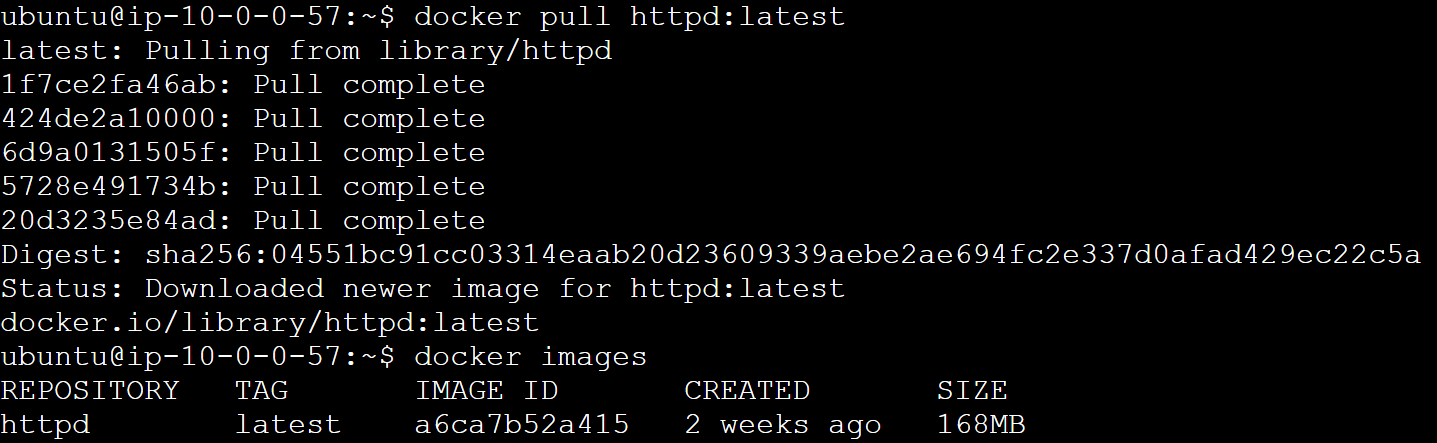

Pull a Docker image as shown below.

Tag your local Docker image and log in to Docker Hub using your Docker credentials (username and password) as shown below.

Finally, push the image to Docker Hub as shown below.

In the image below, you can see that the Docker image has been pushed to the Docker Hub registry.
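The pull, tag, login, and push steps described above can be sketched as a small script. The values here are assumptions for illustration: your-dockerhub-username is a placeholder, and httpd:latest and the my-httpd repository name are borrowed from the ECR example later in this answer. The function is only defined, so nothing is pushed until you call it.

```shell
#!/bin/sh
# Placeholder values: replace with your own Docker Hub username and repository name.
DOCKERHUB_USER="your-dockerhub-username"
SOURCE_IMAGE="httpd:latest"
TARGET_REPO="my-httpd"
TARGET_TAG="latest"

push_to_dockerhub() {
    target="$DOCKERHUB_USER/$TARGET_REPO:$TARGET_TAG"
    # Pull the public image so that it exists locally
    docker pull "$SOURCE_IMAGE" || return 1
    # Docker Hub expects the name in the form <username>/<repository>:<tag>
    docker tag "$SOURCE_IMAGE" "$target" || return 1
    # Prompts for the password (or an access token)
    docker login --username "$DOCKERHUB_USER" || return 1
    docker push "$target"
}

# Run it with:  push_to_dockerhub
```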

Pushing a local image to AWS ECR:

Installation of awscli on Ubuntu:

sudo apt update

sudo apt install awscli

aws configure (enter your AWS access key ID and secret access key when prompted)

Create an Amazon ECR repository: You can do this through the AWS Management Console or by using the AWS CLI. For example, using the AWS CLI:

aws ecr create-repository --repository-name my-httpd

The repository has been created, as you can observe in the image below.

Login to AWS ECR (Authenticate Docker to your ECR registry):

aws ecr get-login-password --region ap-south-1 | docker login --username AWS --password-stdin 264638186531.dkr.ecr.ap-south-1.amazonaws.com

Replace ap-south-1 and 264638186531 with your own AWS region and account ID.

Pull a Docker image (httpd:latest in this example) as shown below.

Tag your Docker image with the ECR repository URI:

docker tag httpd:latest 264638186531.dkr.ecr.ap-south-1.amazonaws.com/my-httpd:latest

Replace my-httpd with the name of your ECR repository and latest with the tag of your Docker image.

Push the image to ECR:

docker push 264638186531.dkr.ecr.ap-south-1.amazonaws.com/my-httpd:latest

You can observe that the image has been pushed to Amazon ECR.

As with the tag command, replace the account ID, region, repository name, and image tag with your own values.

Pulling an Image from ECR:

Authenticate Docker to your ECR registry:

aws ecr get-login-password --region ap-south-1 | docker login --username AWS --password-stdin 264638186531.dkr.ecr.ap-south-1.amazonaws.com

Replace ap-south-1 with your AWS region code and 264638186531 with your AWS account ID.

Pull the image:

docker pull 264638186531.dkr.ecr.ap-south-1.amazonaws.com/my-httpd:latest

Replace my-httpd with the name of your ECR repository and latest with the tag of the image you want to pull.

The image has been pulled successfully from Amazon ECR as shown below.
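Because the registry address is repeated in the login, tag, push, and pull commands, it can help to build it once from variables. The sketch below assumes a placeholder account ID (123456789012); the helper function names are hypothetical, while the aws and docker commands are the same ones used above. If you do not know your account ID, aws sts get-caller-identity --query Account --output text prints it.

```shell
#!/bin/sh
# Placeholder values: replace with your own account ID, region, and repository.
AWS_ACCOUNT_ID="123456789012"
AWS_REGION="ap-south-1"
ECR_REPO="my-httpd"
IMAGE_TAG="latest"

# Registry host: <account-id>.dkr.ecr.<region>.amazonaws.com
ecr_registry() {
    printf '%s.dkr.ecr.%s.amazonaws.com' "$AWS_ACCOUNT_ID" "$AWS_REGION"
}

# Full image reference: <registry>/<repository>:<tag>
ecr_image() {
    printf '%s/%s:%s' "$(ecr_registry)" "$ECR_REPO" "$IMAGE_TAG"
}

# Authenticate Docker to the registry with a temporary password from the AWS CLI
ecr_login() {
    aws ecr get-login-password --region "$AWS_REGION" |
        docker login --username AWS --password-stdin "$(ecr_registry)"
}

# Tag the local httpd image for ECR and push it
ecr_push() {
    ecr_login || return 1
    docker tag "httpd:$IMAGE_TAG" "$(ecr_image)" || return 1
    docker push "$(ecr_image)"
}

# Run it with:  ecr_push
```

Keeping the account ID and region in one place avoids the most common mistake in this workflow, which is logging in to one registry address and pushing to another.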

5. Explain the Docker file statements - COPY, ADD, CMD, RUN, ENTRYPOINT.

Ans:

COPY:

Syntax: COPY <source> <destination>

This statement copies files or directories from the build context (the directory passed to docker build, on the machine where the build is initiated) into the Docker image. It is commonly used to add application code and resources to the image.

Example:

COPY ./app /usr/src/app

ADD:

Syntax: ADD <source> <destination>

Similar to COPY, but with some additional features. Besides copying local files, it can fetch files from remote URLs and automatically extract local tar archives into the destination. Files fetched from a URL are not extracted.

COPY is recommended for most cases because its behavior is explicit; use ADD only when you need the URL download or the automatic tar extraction.

Example:

ADD https://example.com/archive.zip /usr/src/

CMD:

Syntax: CMD ["executable","param1","param2"] (exec form) or CMD command param1 param2 (shell form)

This statement specifies the default command to run when a container is started from the built image. It can be overridden by passing a different command after the image name in docker run.

If the Dockerfile has multiple CMD instructions, only the last one takes effect.

Examples:

CMD ["npm", "start"]

# or

CMD npm start

RUN:

Syntax: RUN <command>

This statement is used to execute commands during the image build process. It is often used for installing dependencies, updating packages, and making other changes to the system.

Each RUN instruction creates a new layer in the image.

Example:

RUN apt-get update && apt-get install -y curl

ENTRYPOINT:

Syntax: ENTRYPOINT ["executable", "param1", "param2"] (exec form) or ENTRYPOINT command param1 param2 (shell form)

This sets the primary command for the container. It specifies the executable to run when the container starts.

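Unlike CMD, ENTRYPOINT is not replaced when the container is run with command-line arguments: in exec form, arguments passed to docker run are appended to the ENTRYPOINT command, and CMD, if present, supplies the default arguments. The ENTRYPOINT itself can only be replaced with the --entrypoint option of docker run.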

Example:

ENTRYPOINT ["python", "app.py"]
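The interaction between ENTRYPOINT and CMD is easiest to see by running a tiny image. The sketch below is illustrative only: the image name entrypoint-demo, the alpine:3.19 base image, and the two function names are assumptions, not part of the assignment. The first function writes a three-line Dockerfile, and the second builds it and runs it three ways.

```shell
#!/bin/sh
# Write a minimal Dockerfile that combines ENTRYPOINT and CMD into directory $1.
write_entrypoint_demo() {
    dir="$1"
    mkdir -p "$dir" || return 1
    cat > "$dir/Dockerfile" <<'EOF'
FROM alpine:3.19
# ENTRYPOINT fixes the executable and its leading arguments
ENTRYPOINT ["echo", "hello"]
# CMD supplies default trailing arguments that docker run can replace
CMD ["world"]
EOF
}

# Build the image and run it with and without extra arguments.
run_entrypoint_demo() {
    dir="$(mktemp -d)" || return 1
    write_entrypoint_demo "$dir" || return 1
    docker build -t entrypoint-demo "$dir" || return 1
    # No arguments: CMD is appended to ENTRYPOINT, printing "hello world"
    docker run --rm entrypoint-demo
    # Arguments replace CMD but not ENTRYPOINT, printing "hello there"
    docker run --rm entrypoint-demo there
    # --entrypoint replaces ENTRYPOINT itself; this lists the root directory
    docker run --rm --entrypoint ls entrypoint-demo /
}

# Run it with:  run_entrypoint_demo
```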

6. Create a Docker Volume Container and attach it to two different Nginx containers.

Installation of Docker on Ubuntu:

sudo apt update

sudo apt install docker.io

sudo systemctl start docker

sudo systemctl enable docker

docker --version

sudo usermod -aG docker $USER

newgrp docker (or log out and back in, so that the new group membership takes effect)

sudo systemctl status docker

groups

To create a Docker volume container and attach it to two different Nginx containers, you can follow these steps:

Create a Docker volume:

docker volume create mynginxvolume

Create a Docker container for the volume:

docker run -d --name volume-container -v mynginxvolume:/data nginx:latest

This container is solely for managing the volume and doesn't have to run any services.

Create the first Nginx container:

docker run -d --name nginx-container-1 --volumes-from volume-container -p 8080:80 nginx:latest

This command creates an Nginx container named nginx-container-1 and attaches the volume from the volume-container to it. The container's port 80 is mapped to the host machine's port 8080.

Create the second Nginx container:

docker run -d --name nginx-container-2 --volumes-from volume-container -p 8081:80 nginx:latest

This command creates another Nginx container named nginx-container-2 and attaches the same volume from the volume-container to it. The container's port 80 is mapped to the host machine's port 8081.

Now you have two Nginx containers (nginx-container-1 and nginx-container-2) sharing the same Docker volume (mynginxvolume) through the volume container (volume-container).

Both Nginx containers will have access to the same data in the volume, and changes made in one container will be reflected in the other. This setup is useful for scenarios where you want to share data between multiple containers while maintaining isolation.
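One way to confirm that the volume really is shared is to write a file from one container and read it from the other. This is a minimal sketch that assumes the two containers created above are running; the file name shared.txt and the function name are hypothetical.

```shell
#!/bin/sh
# Write a file through the first container and read it back through the second.
# Both containers mount mynginxvolume at /data via --volumes-from.
verify_shared_volume() {
    docker exec nginx-container-1 sh -c 'echo "written by container 1" > /data/shared.txt' || return 1
    docker exec nginx-container-2 cat /data/shared.txt
}

# Run it with:  verify_shared_volume
```

If the setup is correct, the second command prints the line written by the first container.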

7. Create a Docker File for a Python-based flask application. Execute the same using Jenkins Pipeline. You should have a <ip-address>:5000 flask webpage running.

Ans:

Pre-reqs:

An EC2 instance to host Jenkins and the application.

Python 3.8+ - for running locally, linting, running tests etc

Docker - for running as a container, or image build and push

AWS CLI (aws configure) - for deployment to AWS.

Install make

Jenkins set up and installation.

Install the following plugins in Jenkins:

Project Repo: github.com/pavankumarindian/FlaskApp-Deploy..

Configure the docker tool as shown below.

Add docker hub credentials so that the docker image can be pushed or pulled from the docker hub.

Create a pipeline project as shown below.

Configure the pipeline job as shown below.

Click on Build Now. As you can see, the pipeline job ran successfully.

You can see below that the Docker image is created and pushed to the Docker Hub registry.

You can verify the deployment of the web app on the EC2 instance as follows:

<public-ip>:5000
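The project files live in the repository linked above and are not reproduced here, so the following is only a sketch of what a minimal equivalent could look like. Everything in it is an assumption for illustration: the app.py contents, the pinned Flask version, the Dockerfile, and the image and container name flask-demo. The second function contains the build and run commands that a Jenkins pipeline stage could execute in an sh step.

```shell
#!/bin/sh
# Write a minimal Flask project (hypothetical contents) into directory $1.
write_flask_project() {
    dir="$1"
    mkdir -p "$dir" || return 1
    cat > "$dir/app.py" <<'EOF'
from flask import Flask

app = Flask(__name__)


@app.route("/")
def index():
    return "Hello from Flask in Docker!"


if __name__ == "__main__":
    # Bind to all interfaces so the app is reachable through the published port
    app.run(host="0.0.0.0", port=5000)
EOF
    printf 'Flask==2.3.3\n' > "$dir/requirements.txt"
    cat > "$dir/Dockerfile" <<'EOF'
FROM python:3.9-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY app.py .
EXPOSE 5000
CMD ["python", "app.py"]
EOF
}

# Build the image and (re)start the container, publishing port 5000 on the host.
deploy_flask() {
    dir="$1"
    docker build -t flask-demo "$dir" || return 1
    # Remove a container left over from a previous build, if there is one
    docker rm -f flask-demo 2>/dev/null
    docker run -d --name flask-demo -p 5000:5000 flask-demo
}

# Run it with:  write_flask_project ./flask-demo && deploy_flask ./flask-demo
```

After deploy_flask finishes, curl http://localhost:5000 on the instance should return the greeting, and the same page is reachable from outside on port 5000 once the security group allows it.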

8. Create and deploy WordPress application using Docker Compose file. Create a Jenkins Job to execute your docker-compose file and the output should show the IP address of the WordPress application.

Project Repo: github.com/pavankumarindian/docker-compose-..

Prerequisites:

1. Launch an EC2 instance (e.g., Amazon Linux).

2. Install Jenkins.

3. Install Docker and Docker Compose.

4. Install Git.

Launch EC2 instance and Install Jenkins:

First, launch an EC2 instance (Amazon Linux) and install Jenkins on it.

Install Jenkins:

sudo yum update -y

sudo wget -O /etc/yum.repos.d/jenkins.repo https://pkg.jenkins.io/redhat-stable/jenkins.repo

sudo rpm --import https://pkg.jenkins.io/redhat-stable/jenkins.io-2023.key

sudo yum upgrade

sudo dnf install java-17-amazon-corretto -y

sudo yum install jenkins -y

sudo systemctl enable jenkins

sudo systemctl start jenkins

sudo systemctl status jenkins

In the "Configure Security Group" step, add a rule to allow incoming traffic on port 8081.

Open the Jenkins systemd service file for editing:

sudo nano /etc/systemd/system/multi-user.target.wants/jenkins.service

Look for the Environment line specifying the JENKINS_PORT. It should look like this:

Environment="JENKINS_PORT=8080"

Change the port number to your desired value. For example: Environment="JENKINS_PORT=8081"

Replace 8081 with the port number you want to use.

Save the changes and exit the text editor.

Reload the systemd configuration:

sudo systemctl daemon-reload

Restart Jenkins to apply the changes:

sudo service jenkins restart

Now Jenkins should be running on the new port. Access it through your web browser using http://<Public-ip-address>:<new_port>.

Install Docker:

sudo yum update -y

sudo yum install docker -y

sudo service docker start

sudo usermod -a -G docker $USER (In my case it is ec2-user)

newgrp docker

docker --version

Install Docker-compose:

sudo curl -L "https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

docker-compose --version

Install Git:

sudo yum install git

Add the jenkins user to the docker group so that Jenkins jobs can run Docker commands without sudo:

sudo usermod -a -G docker jenkins

Restart jenkins:

sudo service jenkins restart

Create a pipeline job as shown below.

Configure the pipeline job as shown below.

Run the pipeline job (Build Now) as shown below.

Console output:

You can observe from the image below that the WordPress application has been deployed successfully on the EC2 instance.

You can check it by entering <public ip>:8080

(Don't forget to open port 8081 for Jenkins and port 8080 for the WordPress application in the inbound rules of the security group.)
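The compose file itself is in the linked repository and is not shown here, so the following is a sketch of what such a file typically contains rather than the repository's actual contents. The service names, the example passwords, and the two function names are assumptions. The first function writes a docker-compose.yml with a MySQL database and a WordPress container published on host port 8080. The second is what a Jenkins job could run, and it prints the address of the application; the public IP is passed in as an argument because how you look it up depends on your environment.

```shell
#!/bin/sh
# Write a WordPress + MySQL compose file (illustrative values) into directory $1.
write_wordpress_compose() {
    dir="$1"
    mkdir -p "$dir" || return 1
    cat > "$dir/docker-compose.yml" <<'EOF'
services:
  db:
    image: mysql:8.0
    environment:
      MYSQL_DATABASE: wordpress
      MYSQL_USER: wordpress
      MYSQL_PASSWORD: example-password
      MYSQL_ROOT_PASSWORD: example-root-password
    volumes:
      - db_data:/var/lib/mysql
  wordpress:
    image: wordpress:latest
    depends_on:
      - db
    ports:
      - "8080:80"
    environment:
      WORDPRESS_DB_HOST: db:3306
      WORDPRESS_DB_USER: wordpress
      WORDPRESS_DB_PASSWORD: example-password
      WORDPRESS_DB_NAME: wordpress
volumes:
  db_data:
EOF
}

# Start the stack and print where WordPress can be reached.
# $1 = directory containing docker-compose.yml, $2 = public IP of the instance
deploy_wordpress() {
    dir="$1"
    public_ip="$2"
    docker-compose -f "$dir/docker-compose.yml" up -d || return 1
    echo "WordPress is available at http://$public_ip:8080"
}

# Run it with:  write_wordpress_compose ./wp && deploy_wordpress ./wp <public-ip>
```

Replace the example passwords before using this anywhere that is reachable from the internet.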

9. Create a Docker Multistage build for a Python-based application.

A Docker multistage build is a technique that allows you to use multiple FROM statements in your Dockerfile. This is particularly useful for optimizing the size of your final Docker image by discarding unnecessary build artifacts from intermediate stages. Below is an example of a Dockerfile for a Python-based application using a multistage build:

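# Stage 1: Build stage
FROM python:3.9 AS builder

# Set the working directory
WORKDIR /app

# Create a virtual environment so the installed packages can be copied to the next stage
RUN python -m venv /opt/venv
ENV PATH="/opt/venv/bin:$PATH"

# Copy the requirements file first to leverage Docker cache
COPY requirements.txt .

# Install dependencies into the virtual environment
RUN pip install --upgrade pip && pip install -r requirements.txt

# Copy the application code
COPY . .

# Stage 2: Production stage
FROM python:3.9-slim

# Set the working directory
WORKDIR /app

# Copy only the installed dependencies and the application code from the builder stage
COPY --from=builder /opt/venv /opt/venv
COPY --from=builder /app /app

# Put the virtual environment first on PATH so that flask resolves to the copied install
ENV PATH="/opt/venv/bin:$PATH"

# Expose the port your application will run on
EXPOSE 5000

# Define environment variables
ENV FLASK_APP=app.py
ENV FLASK_RUN_HOST=0.0.0.0

# Run the application
CMD ["flask", "run"]

In this example:

Stage 1 (builder): This stage installs the application's dependencies into a virtual environment at /opt/venv and copies in the application code. It uses the full python:3.9 image as the base image, which includes the build tools that some packages need in order to compile.

Stage 2: This stage is the production stage. It uses the python:3.9-slim image, which is a smaller base image without development tools and libraries. It copies only the virtual environment and the application code from the builder stage using the --from=builder option, so the packages installed in Stage 1 are available at runtime.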

Here is requirements.txt file for a Flask application:

Flask==2.3.3

You can customize this file based on the specific dependencies your Python application requires. Make sure to list each dependency on a new line, following the format package-name==version. When you have your requirements.txt file ready, place it in the same directory as your Dockerfile. The Dockerfile's COPY requirements.txt . line will copy this file during the build process.

Remember to adjust the dependencies based on your application's actual requirements.

To build your Docker image using this Dockerfile, you can use the following command:

docker build -t your-image-name .

Replace your-image-name with the desired name for your Docker image. This Dockerfile assumes that your application is a Flask app listening on port 5000. Adjust the Dockerfile according to your specific application requirements.
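To see what the multistage build saves, you can build the builder stage on its own and compare its size with the final image. This is a minimal sketch: the tag names builder and final and the function name are assumptions, and it must be run from the directory that contains the Dockerfile.

```shell
#!/bin/sh
# Build the first stage and the final image separately, then list both sizes.
compare_stage_sizes() {
    # --target stops the build at the named stage
    docker build --target builder -t your-image-name:builder . || return 1
    # Without --target, the build runs through to the last stage
    docker build -t your-image-name:final . || return 1
    # The SIZE column shows the difference between the two tags
    docker image ls your-image-name
}

# Run it with:  compare_stage_sizes
```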

10. Explain the different Docker networking and the significance of each mode.

Ans:

Docker provides several networking modes to facilitate communication between containers and the outside world. Each networking mode serves a specific purpose, and the choice depends on the requirements of your application. Here are the main Docker networking modes:

Bridge mode:

Description: This is the default network mode. In this mode, each container gets its own internal IP address, and containers on the same bridge network can communicate with each other.

Significance:

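Containers can communicate with each other using their internal IP addresses. On a user-defined bridge network (created with docker network create), they can also reach each other by container name.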

You can expose specific ports from the container to the host machine or to the external world.

Example:

docker run --name my_container -p 8080:80 -d my_image

In this example, the container's port 80 is mapped to the host machine's port 8080.
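Container-to-container communication on a bridge network can be checked with a short experiment on a user-defined bridge, where Docker also resolves container names. The sketch below is illustrative: the network name demo_bridge, the container name web, the busybox client image, and the function name are all assumptions.

```shell
#!/bin/sh
# Start an Nginx container on a user-defined bridge and fetch its home page
# from a second container on the same network, addressing it by name.
bridge_dns_demo() {
    docker network create demo_bridge || return 1
    docker run -d --name web --network demo_bridge nginx:latest || return 1
    # Give Nginx a moment to start listening
    sleep 2
    # "web" resolves to the first container's internal IP address
    docker run --rm --network demo_bridge busybox:latest wget -qO- http://web
    # Clean up the demo container and network
    docker rm -f web
    docker network rm demo_bridge
}

# Run it with:  bridge_dns_demo
```

If name resolution works, the wget call prints the HTML of the default Nginx welcome page. No port is published with -p here, because traffic between containers on the same bridge does not pass through the host's published ports.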

Host mode:

Description: In host mode, a container shares the network namespace with the host machine, using the host's network stack.

Significance:

Containers in host mode have direct access to the host machine's network interfaces.

It can lead to better performance in certain scenarios because there is no network address translation (NAT) overhead.

Example:

docker run --name my_container --network host -d my_image

None mode:

Description: In this mode, a container runs in isolation with only a loopback interface and no external network connectivity.

Significance:

Useful when you want complete network isolation for a container.

You can manually create and attach custom networks or use other means for communication.

Example:

docker run --name my_container --network none -d my_image

Overlay mode:

Description: Overlay networking enables communication between containers across multiple Docker hosts. It is typically used in Docker Swarm mode.

Significance:

Allows creating a virtual network that spans multiple Docker hosts, facilitating container communication in a cluster.

Useful for scaling applications across multiple machines.

Example:

docker network create --driver overlay my_overlay_network

docker service create --network my_overlay_network --name my_service my_image

Macvlan mode:

Description: Macvlan allows you to assign a MAC address to a container, making it appear as a physical device on the network.

Significance:

Useful for scenarios where you need containers to appear as physical devices on the network.

Enables containers to be directly accessible from other devices on the same network.

Example:

docker network create -d macvlan --subnet=192.168.1.0/24 --gateway=192.168.1.1 -o parent=eth0 my_macvlan_network

docker run --name my_container --network my_macvlan_network -d my_image